Cloud vs. On-Premise: Where to Deploy Your AI Applications

date

Jul 27, 2023

slug

cloud-vs-prem

status

Published

tags

Infrastructure

summary

Risk Assessment of Cloud vs On-Premise

type

Post

Cloud vs. On-Premise: Where to Deploy Your AI Applications

I’m about to provide you with the information you need to make a profitable low-risk assessment on whether to choose Cloud or On Premise AI.

At APAC AI, our partnership with AWS and Nvidia has provided us with unparalleled access to the world’s most powerful GPUs and we’re also working hand in hand with on premise datacenters to train any of our hundreds of various multi-modality models.

We have made thousands of mistakes and we want to spare you the pain.

If you’d like to learn more about APAC AI, The Automated Public Assistance Company and how we can help you lower the cost of maintaining your AI app, click below or email me at kye@apac.ai.

GitHub - kyegomez/Transcend: Transcend is a premium AI consulting package designed to help small…Transcend is a premium AI consulting package designed to help small businesses and enterprises create custom-tailored…github.com

GitHub - kyegomez/APACAI: The APAC AI Hub for Documents, Product Briefs, Plans, and SOPS, we're…The APAC AI Hub for Documents, Product Briefs, Plans, and SOPS, we're currently raising SAFE 100M$ at a 1Billion$…github.com

AI applications are no longer exclusive to tech giants like Facebook or Google.

Increased accessibility to storage technologies and GPUs is enabling the masses to access AI capabilities, like machine learning and robotic process automation, with the swipe of a credit card.

But where exactly should your organization store the massive amount of data often needed for AI initiatives?

As organizations of all sizes begin exploring AI applications, cloud vs. on-premise deployment will become a pivotal issue for IT service providers and other channel partners.

The question that every company must ask as they tackle AI is “where do we fall on the AI continuum?”

Answering this question can help you determine whether you’ll require on-prem data center infrastructure to support AI needs or whether consuming prebuilt models in the cloud can help you reach your objective.

When it comes to choosing between cloud and on-premise AI, it’s critical to understand the risks.

According to a report by 451 Research, 90% of companies use cloud services in some form.

However, the same report found that 60% of workloads are still run on-premise, indicating a balance between the two.

In terms of costs, cloud services can range from a few hundred dollars per month for small-scale projects to tens of thousands of dollars per month for large-scale projects.

On the other hand, on-premise solutions can have an upfront cost of several thousand dollars for the necessary hardware, with additional ongoing costs for maintenance and updates.

GPU Clusters for AI

When it comes to AI, the choice of GPU can significantly impact the performance and efficiency of your models.

NVIDIA’s A100, H100, T4, and V100 GPUs are among the top choices for AI workloads due to their high computational power and efficiency.

NVIDIA A100 Tensor Core GPU

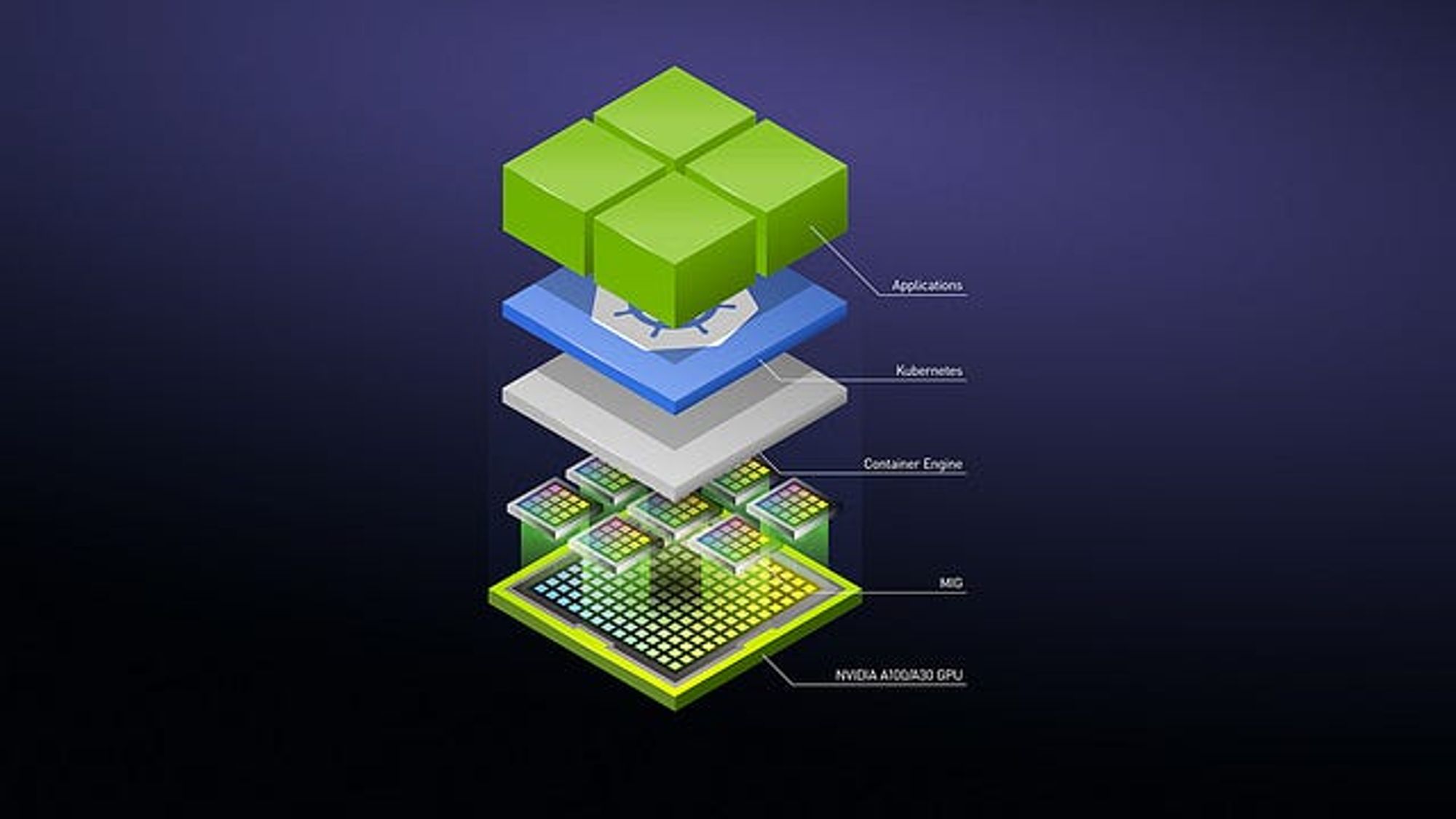

The NVIDIA A100 Tensor Core GPU is designed for demanding AI and high-performance computing workloads.

It can efficiently scale to thousands of GPUs or be partitioned into seven GPU instances to accelerate workloads of all sizes.

The cost of an A100 GPU can range from $10,000 to $20,000 depending on the configuration of GPU RAM.

NVIDIA H100 Tensor Core GPU

The NVIDIA H100 Tensor Core GPU is the world’s most powerful data center GPU for AI, HPC, and data analytics.

It delivers a giant leap in application performance and is designed to handle a broad range of compute-intensive workloads in data centers.

The cost of an H100 GPU is more than $40,000 per unit.

NVIDIA T4 and V100 GPUs

For smaller cluster setups, the NVIDIA T4 and V100 GPUs are excellent choices.

The NVIDIA T4 is a universal deep learning accelerator ideal for distributed computing environments and costs around $2,000.

Meanwhile, the NVIDIA V100 is one of the most advanced data center GPU ever built to accelerate AI, HPC, and graphics, with a price tag of around $8,000 to $10,000..

Cloud vs. On-Premise Hosting for AI Applications

The differences between cloud and on-premise hosting are often compared to renting vs. buying a home.

Cloud hosting is a lot like renting; the stay of AI applications is as long as the contract terms dictate.

Furthermore, the maintenance of the hardware is the responsibility of the hosting provider.

On-premise hosting, on the other hand, is like buying a home; the application can stay on the hardware as long as business requires it. But deciding between the two depends on a few factors:

Cloud vs. On-Premise Hosting for AI Applications

On-premise deployment of AI applications would eliminate the need for renewal of contract terms, which will reduce usage costs.

However, in-house data center maintenance costs could increase as a result.

While experimenting with AI, choosing the pay as you go options would save costs.

However, maintenance costs can increase over time.

Scalability

On-premise hosting offers complete control over the hardware, which means that the administrators of a company can tightly control updates.

But on-premise hosting does require advanced planning to scale hardware.

This is because it requires time to gather the necessary data for updating it.

Cloud resources can be rapidly adjusted to accommodate specific demands and increase the scalability of hardware.

With cloud services, there is too much software clutter within the hardware stacks that reduces the scalability.

Security

Full control over data stored on enterprise premises; no third party has access to data unless hacked.Hosting providers must keep their systems updated and data encrypted to avoid breaches.

Still, your company can’t be sure where your data is stored and how often it is backed up; data is also accessible by third parties.

Data Gravity

Considering the costs of training neural networks with massive datasets for data transfer, companies may want to deploy their AI applications on-premises if the data is available there.

If the data required to build AI applications resides on the cloud, then it’s best to deploy applications there.

- The location of the largest source of data for an enterprise determines the location of its most critical applications, as explained by the concept of ‘data gravity’.

- Data gravity is the ability of data to attract applications, services, and other data towards itself. It is among the most important factors to be considered while choosing between cloud and on-premise platforms.

The Case for Cloud-Based AI Applications

Cloud-based AI services are an ideal solution for many organizations. Instead of building out a massive data center to gain access to compute, you can use the infrastructure someone else already maintains.

In fact, one reason why AI has become so pervasive is cloud providers offering plug-and-play AI cloud services, as well as access to enough compute power and pre-trained models to launch AI applications.

This significantly reduces the barriers to entry.

But be aware, in many cases the pre-trained models or storage requirements of the cloud can be cost-prohibitive; higher GPU counts get expensive fast and training large datasets on the public cloud can be too slow.

Still, the cloud can often be the best option in terms of “testing the waters” of AI and experimenting with which AI initiatives work best for an organization.

The Case for On-Premise AI

So then what moves customers on-premise?

There’s a whole ecosystem of tools built for on-premise infrastructure that can work with mass amounts of compute power–which can be very expensive in the cloud.

Thus, some IT directors find it more economical to do this on-premise or prefer a capital expense to an operational expense model.

Furthermore, if your organization determines that it wants to get more involved in this or roll-out AI at scale, then it may make more sense to invest in on-premises infrastructure instead of consuming cloud-based services.

Challenges to Implementing AI

As you evaluate how to implement AI initiatives, it’s important to remember that much of the data driving AI is actually siloed in legacy infrastructure and is not necessarily in the right format nor is it easily accessible.

What’s more, there’s a massive amount of unstructured data to be processed!

And in many cases, that data has grown beyond a company’s infrastructure.

Dealing with much larger data sets requires more difficult computation and algorithms; the truth is, most of your time could be spent cleaning data, de-identifying it, and getting it to a point where it can be used to gather insights.

And once you do decide on a place to deploy AI applications, it can also be a challenge to give engineers and data scientists access to that data.

However, if there’s one thing that is certain, it’s that AI will be driving innovation and competitive differentiation for years to come.

So deciding on the location of infrastructure for training and running a neural network for AI is a very big decision that should be made with a holistic view of requirements and economics.

Cloud Or On-Prem?

“Everyone knows” the cloud is the least expensive way to host AI development and production, right?

Well, it turns out that the best solution may depend on where you are on your AI journey, how intensively you will be building out your AI capabilities, and what your end-game looks like.

On-Premise vs. Cloud Costs

When considering on-premise vs. cloud costs, it’s important to factor in not only the upfront costs of the hardware but also the ongoing costs of power, cooling, maintenance, and upgrades.

For example, a single NVIDIA A100 GPU can cost up to $20,000, and a server with eight A100 GPUs can cost upwards of $200,000.

On top of this, there are the ongoing costs of power and cooling, which can add up to several thousand dollars per year.

In contrast, cloud providers like AWS, Google Cloud, and Azure offer GPU instances on a pay-as-you-go basis.

For example, an AWS p3.16xlarge instance with eight NVIDIA V100 GPUs costs around $24.48 per hour, which equates to approximately $214,000 per year if run continuously.

However, this cost includes not only the use of the GPUs but also the associated storage, networking, power, cooling, and maintenance.

Therefore, while the upfront costs of on-premise GPUs can be higher, they may be more cost-effective in the long run, especially for organizations with heavy, continuous AI workloads.

On the other hand, cloud GPUs can provide more flexibility and are ideal for organizations with variable workloads or those looking to experiment with AI without a large upfront investment.

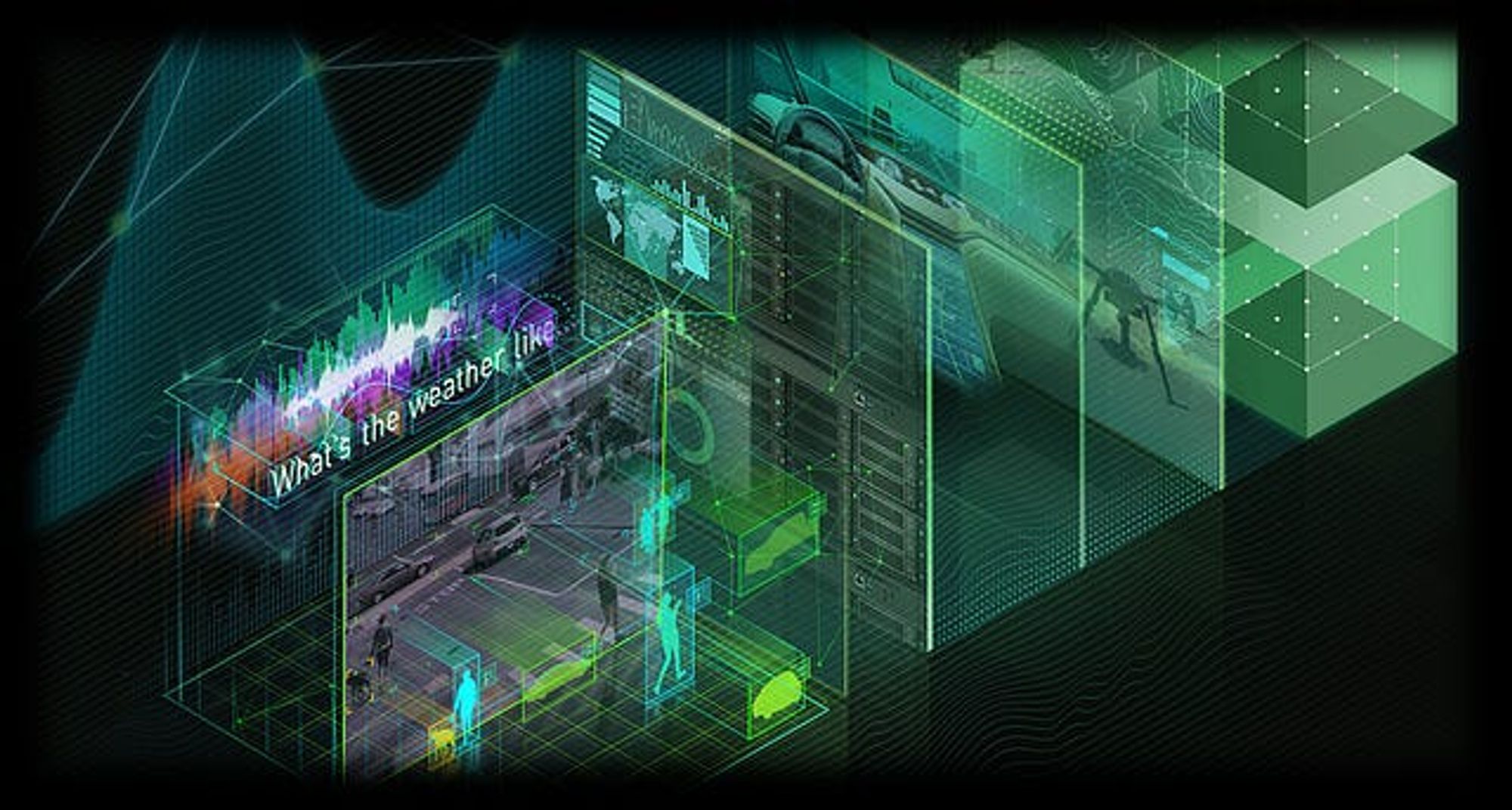

Why cloud computing is so attractive for AI

Cloud service providers (CSPs) have extensive portfolios of development tools and pre-trained deep neural networks for voice, text, image, and translation processing.

Much of this work stems from the internal development of AI for in-house applications, so it is pretty robust.

Microsoft Azure, for example, offers around 30 pre-trained networks and tools which can be accessed by your cloud-hosted application as APIs.

Many models can even be customized with users’ own data, such as specific vocabulary or images.

Amazon SageMaker provides cradle-to-production AI development tools, and AWS offers easy chatbot, image recognition, and translation extensions to AWS-hosted applications.

Google has a pretty amazing slew of tools as well.

Most notably, perhaps, is its AutoML, which builds Deep Learning neural networks auto-magically, saving weeks or months of labor in some cases.

All of these tools have a few things in common.

First, they make building AI applications seem enticingly easy.

Since most companies struggle to find the right skills to staff an AI project, this is very attractive.

Second, they offer ease of use, promising click-and-go simplicity in a field full of relatively obscure technology.

Lastly, all these services have a catch — for the most part, they require that the application you develop in their cloud runs in their cloud.

The fur-lined trap

These services are therefore tremendously sticky.

If you use Amazon Poly to develop chatbots, for example, you can never move that app off of AWS.

If you use Microsoft’s pre-trained DNNs for image processing, you cannot easily run the resulting application on your own servers.

You will probably never see a Google TPU in a non-Google data center, nor be able to use the Google AutoML tool if you later decide to self-host the development process.

Now, stickiness, in and of itself, is not necessarily a bad thing — right?

After all, elastic cloud services can offer a flexible hardware infrastructure for AI, complete with state-of-the-art GPUs or FPGAs to accelerate the training process and handle the flood of inference processing you hope to attract to your new AI (where the trained neural network is actually used for real work or play).

You don’t have to deal with complex hardware configuration and purchase decisions, and the AI software stacks and development frameworks are all ready-to-go.

For these reasons, many AI startups begin their development work in the cloud, and then move to their own infrastructure for production.

Here’s the catch: a lot of AI development, especially training deep neural networks, eventually demands massive computation.

Furthermore, you don’t stop training a (useful) network; you need to keep it fresh with new data and features, or perhaps build a completely new network to improve accuracy using new algorithms as they come out.

From my personal experience of training thousands of models it can become pretty expensive in the cloud, costing 2–3 times as much as building your own private cloud to train and run the neural nets.

Note that one can reserve dedicated GPUs for longer periods in the cloud, significantly lowering the costs, but, from what I’ve seen, owning your own hardware remains the lowest cost for ongoing, GPU-heavy workloads but again it comes with overhead maintenance.

As I will explore in the next section, there are other, additional reasons for cloud or self-hosting.

Conclusion

The location of infrastructure for training and running a neural network for Artificial Intelligence is a very big decision that should be made with a holistic view of requirements and economics.

The cost side of the equation may warrant your own careful TCO analysis.

Many hardware and cloud vendors have TCO models they can share, but, of course, they all have an axe to grind — be prepared to do the homework yourself.

The problem here is that it is challenging to size the required infrastructure (number of servers, number of GPUs, type of storage, etc.) until you are pretty far down the development path.

A good hardware vendor can help here with services — some of which can be quite affordable if you are potentially planning for a substantial investment.

A common option is to start your model experimentation and early development in a public cloud, with a plan for an exit ramp with pre-defined triggers that will tell you if and when you should move the work home.

That includes understanding the benefits of the CSP’s machine learning services, and how you would replace them if you decide to move everything to your own hardware.

References

Lower Model Maintenance Cost by 40%

APAC AI can help lower your costs of maintaining your models by upwards of 40% using techniques like Quantization and Distillation, we’ve helped dozens of enterprises radically lower their model costs in production and the process is seamless and fluid, to lower your costs now schedule an discover call with me below: